Detecting, Quantifying, and Attributing DeepFake Activity on Social Media

The rapid rise of deepfake videos on social media has created serious risks to public trust, personal reputation, democratic discourse, and mental well-being. From impersonation and misinformation to harassment and fraud, deepfake content is increasingly difficult for humans to identify at scale. To address this challenge, FaceOff can be positioned as a DeepFake Analyser—a responsible AI system designed to detect, measure, and attribute deepfake video activity across digital platforms.

Rather than acting as a surveillance or censorship tool, FaceOff functions as an analytical and evidentiary platform, supporting platforms, regulators, law enforcement, and digital forensics teams.

1. Detecting DeepFake Videos at Scale

FaceOff applies multi-layered AI analysis to identify whether a video is likely authentic, manipulated, or synthetically generated.

Key Detection Capabilities

FaceOff examines deepfake indicators such as:

Each video is assigned a DeepFake Confidence Score, allowing analysts to prioritize review rather than relying on binary yes or no decisions.
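As a rough illustration of score-based prioritization, the sketch below orders videos for analyst review instead of returning a yes or no verdict. The `ScoredVideo` type, the `triage` function, the 0 to 1 score range, and the 0.5 cut-off are all assumptions made for this example, not FaceOff's actual interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredVideo:
    video_id: str
    confidence: float  # hypothetical DeepFake Confidence Score in [0, 1]


def triage(videos, review_threshold=0.5):
    """Return videos at or above the threshold, highest score first."""
    flagged = [v for v in videos if v.confidence >= review_threshold]
    return sorted(flagged, key=lambda v: v.confidence, reverse=True)


queue = triage([
    ScoredVideo("a", 0.12),
    ScoredVideo("b", 0.91),
    ScoredVideo("c", 0.64),
])
print([v.video_id for v in queue])  # ['b', 'c']
```

Analysts would work the queue from the top, so the most suspicious items are seen first while low-scoring videos are left alone.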

2. Answering “How Many DeepFake Videos Are Circulating”

FaceOff can ingest video content from:

By continuously analyzing this content, FaceOff generates:

This enables organizations to move from anecdotal awareness to quantified intelligence on deepfake proliferation.
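One simple way to turn per-video scores into a circulation figure is to count, per source, the share of analyzed videos that cross a flagging threshold. The following is a minimal sketch under that assumption; the record shape `(source, confidence)`, the function name, and the 0.8 threshold are illustrative only.

```python
from collections import Counter


def prevalence_by_source(records, threshold=0.8):
    """records: iterable of (source, confidence) pairs.

    Returns {source: share of that source's videos scored >= threshold}.
    """
    total = Counter()
    flagged = Counter()
    for source, confidence in records:
        total[source] += 1
        if confidence >= threshold:
            flagged[source] += 1
    return {source: flagged[source] / total[source] for source in total}


sample = [("platform_x", 0.9), ("platform_x", 0.1), ("platform_y", 0.95)]
print(prevalence_by_source(sample))
```

The result is only as meaningful as the sampling behind it, so a real deployment would also need to report how many videos were analyzed per source.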

3. Identifying “Who Is Acting” in DeepFake Videos

One of the most critical questions in deepfake analysis is who appears to be acting in the manipulated content. FaceOff addresses this carefully and lawfully.

Identity Attribution (With Safeguards)

FaceOff does not automatically identify real individuals without authorization. Instead, it supports:

The output is an Impersonation Likelihood Report, not a definitive identity claim, ensuring legal defensibility.
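The idea of reporting likelihood rather than identity can be sketched as a similarity ranking against a set of reference faces that were enrolled with consent. Everything below is an assumption for illustration: the use of embedding vectors, cosine similarity as the measure, and the names `cosine_similarity` and `impersonation_report`.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def impersonation_report(video_embedding, consented_references):
    """consented_references: {label: embedding}, enrolled with authorization.

    Returns similarity rows sorted from most to least similar. The rows are
    evidence for a reviewer, not an identity decision.
    """
    rows = [
        {"reference": label,
         "similarity": round(cosine_similarity(video_embedding, emb), 3)}
        for label, emb in consented_references.items()
    ]
    return sorted(rows, key=lambda r: r["similarity"], reverse=True)


report = impersonation_report([1.0, 0.0], {"person_a": [1.0, 0.0], "person_b": [0.0, 1.0]})
print(report)
```

Restricting the comparison to consented references keeps the sketch in line with the safeguard stated above: no open-ended lookup of real individuals.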

4. Mapping DeepFake Actors and Networks

Beyond individual videos, FaceOff can uncover patterns and networks behind deepfake creation and dissemination.

Behavioral and Network Analysis

FaceOff correlates:

This helps identify:

5. Evidence-Grade Reporting for Platforms and Authorities

FaceOff produces forensically sound reports suitable for moderation decisions, investigations, or legal proceedings.

Report Outputs Include

These reports support content takedown, victim protection, and prosecution, while respecting due process.

6. Privacy, Ethics, and Governance Controls

Given the sensitivity of facial data and social media content, FaceOff is designed with strong safeguards:

FaceOff acts as a decision-support system, not a judge or executioner.

7. Use Cases Enabled by FaceOff DeepFake Analyser

Conclusion: From Viral Deception to Verifiable Truth

Deepfakes thrive in environments of scale, speed, and ambiguity. FaceOff counters this by bringing structure, evidence, and accountability to the digital ecosystem.

By detecting how many deepfake videos are circulating, identifying who appears to be acting in them, and mapping who is behind their creation, FaceOff transforms deepfake response from reactive panic to proactive governance.

Used responsibly, FaceOff can help restore trust in digital media—without compromising privacy, free expression, or human rights.

By Mr. Roshan Kumar Sahu, Ex-Co-founder, FaceOff Technologies

As digital ecosystems expand across sectors including finance, commerce, healthcare, education, logistics, and public services, the need for secure, trusted, and accessible identity verification becomes critical. Traditional verification processes built around physical presence, manual checks, and legacy biometric devices are no longer sufficient. They struggle to scale, they impose high friction on users, and they remain vulnerable to fraud and impersonation.

FaceOff addresses this challenge by delivering advanced contactless biometric acquisition and artificial intelligence-powered liveness detection across face, fingerprint, and iris recognition. These technologies enable accurate identity verification without physical contact or specialized hardware, creating a new foundation for secure and scalable digital trust.

Contactless Biometric Capture with Artificial Intelligence Liveness Detection

FaceOff enables fully contactless biometric capture through standard mobile cameras, webcams, and low-cost imaging devices. This approach eliminates the need for fingerprint scanners, dedicated hardware, or physical touch points, making biometric collection safe, accessible, and operationally efficient.

Artificial intelligence-powered liveness detection validates that biometric samples are captured from a live human presence and not from photographs, screens, video replays, or synthetic deepfake representations. This verification includes facial motion analysis, depth consistency evaluation, natural reflex detection, skin texture modeling, and micro-movement validation.

This unified technological framework provides major enterprise and public sector advantages.

FaceOff contactless biometric capture delivers higher accuracy by analyzing natural biometric signals rather than surface impressions.

Lower-friction user experiences remove complex enrollment steps and eliminate device dependencies, allowing identity verification from anywhere.

Stronger fraud prevention is achieved by blocking impersonation attacks, biometric spoofing, and deepfake presentation fraud.

Compliance readiness is built directly into the platform, as biometric processing follows privacy-first principles and the regulatory frameworks required for identity handling.

Massive scalability is enabled because capture occurs using widely available cameras and smartphones, removing infrastructure limitations.

This approach establishes a new industry standard for trusted digital identity verification, combining usability and security at unprecedented scale.

Verification and Authentication Across Multiple Modalities

FaceOff extends beyond basic biometric capture into a comprehensive verification and authentication ecosystem supporting face, fingerprint, and iris modalities.

This multi-modal approach allows flexible identity workflows across both online and offline environments.

Biometric verification enables real-time matching of captured biometric samples against registered identity records.

Biometric authentication provides continuous or session-based confirmation that the interacting individual remains the authorized user throughout sensitive transactions or communications.

Mobile platforms support identity workflows even in low-connectivity environments, enabling rural and remote onboarding systems with local processing and deferred synchronization.

Centralized verification systems support large-scale digital service platforms, including banking, recruitment, logistics, citizen services, and healthcare credentialing.

All verification flows leverage artificial intelligence to maintain high confidence thresholds while minimizing false rejections and improving throughput.
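The trade-off behind a confidence threshold can be made concrete with the two standard biometric error rates: the false acceptance rate (impostors scoring at or above the threshold) and the false rejection rate (genuine users scoring below it). The sketch below assumes match scores in the range 0 to 1 and uses made-up sample scores; it does not describe how FaceOff sets its thresholds.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_acceptance_rate, false_rejection_rate) at a threshold.

    A comparison is accepted when its score is >= threshold.
    """
    frr = sum(s < threshold for s in genuine_scores) / len(genuine_scores)
    far = sum(s >= threshold for s in impostor_scores) / len(impostor_scores)
    return far, frr


# Illustrative scores only, not measured data.
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.1, 0.3, 0.5, 0.75]

for t in (0.6, 0.8):
    far, frr = error_rates(genuine, impostor, t)
    print(f"threshold={t}: FAR={far:.2f} FRR={frr:.2f}")
```

Raising the threshold from 0.6 to 0.8 in this toy data removes the false acceptance but doubles the false rejections, which is the tension any threshold choice has to balance.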

Operational Advantages of the FaceOff Platform

FaceOff biometric technology provides transformative benefits across operational and compliance dimensions.

Stronger security is achieved by eliminating dependency on credentials, documents, and visual inspection methods, which are easily compromised.

Higher accuracy comes from combining multiple biometric features and real-time liveness detection to reduce false matches and spoofing success.

Real-time validation allows immediate approval or rejection decisions, avoiding workflow delays.

Rural and offline capability ensures inclusion for underserved regions through mobile-based capture that does not require continuous internet access.

Compliance readiness ensures organizations meet regulatory frameworks governing biometric data, personal data protection, and identity authentication.

End-to-end fraud prevention covers the entire identity lifecycle, from enrollment, verification, and authentication through transaction validation.

Together these features position FaceOff as a comprehensive digital trust solution rather than a single point verification tool.
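The offline capability mentioned above, with local processing and deferred synchronization, is commonly built as a local queue that is drained once connectivity returns. The following is a minimal in-memory sketch of that pattern; the class name, the `upload` and `online` callables, and the record shape are hypothetical, and a real device would persist the queue to disk.

```python
from collections import deque


class DeferredSyncQueue:
    """Holds locally produced verification results until they can be uploaded."""

    def __init__(self):
        self._pending = deque()

    def record(self, result):
        """Store a result produced while offline (or online)."""
        self._pending.append(result)

    def pending_count(self):
        return len(self._pending)

    def flush(self, upload, online):
        """Upload pending results in order while online() is true.

        An item is removed only after upload() returns, so a failed upload
        (raised exception) leaves it queued for the next attempt.
        """
        sent = 0
        while self._pending and online():
            upload(self._pending[0])
            self._pending.popleft()
            sent += 1
        return sent


queue = DeferredSyncQueue()
queue.record({"enrollment_id": 1, "match": True})
queue.record({"enrollment_id": 2, "match": False})

uploaded = []
print(queue.flush(uploaded.append, online=lambda: False))  # 0, still offline
print(queue.flush(uploaded.append, online=lambda: True))   # 2, both sent
```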

Enabling National and Global Digital Transformation

FaceOff’s platform aligns directly with the objectives of India’s digital transformation agenda, including financial inclusion, paperless service delivery, and secure digital onboarding. Its contactless, mobile-first approach allows identity systems to reach the deepest rural populations without requiring dedicated infrastructure.

Global adoption pathways are similarly supported, as enterprises across finance, ecommerce, healthcare, travel, and logistics need digitally scalable identity systems that comply with evolving privacy regulations while protecting against growing biometric fraud threats.

The platform’s modular design allows governments and enterprises to deploy biometric verification customized to their operational risk profiles while maintaining consistent security governance.

A New Identity Verification Ecosystem

FaceOff is not merely offering biometric capture tools. It is building a comprehensive identity assurance ecosystem combining:

Contactless biometric acquisition

Artificial intelligence liveness verification

Multi modal biometric matching

Mobile and centralized identity platforms

Real time transaction authentication

Compliance reporting and auditing tools

This integrated architecture ensures that identity trust is built into digital interactions by default rather than added as a downstream control.

Conclusion

FaceOff is defining the next generation of biometric identity assurance through contactless capture, artificial intelligence-powered liveness detection, and multi-modal verification systems.

By delivering higher accuracy, lower friction, improved fraud protection, full compliance readiness, and unmatched scalability, FaceOff creates a secure, frictionless pathway for digital identity adoption.

This ecosystem provides governments, enterprises, and service platforms with the confidence to expand digital operations securely, ensuring that every verified identity represents a real person participating in a trusted digital economy.

Artificial intelligence identity verification strengthens patient monitoring and prevents errors in modern hospitals

Hospitals around the world are adopting advanced artificial intelligence systems to monitor patient vitals in real time. These early warning systems, often called digital patient monitors, are designed to detect sudden changes in heart rate, oxygen levels, blood pressure and other critical health indicators. As these tools evolve, one technology in particular is emerging as a key safety layer. This technology is known as FaceOff.

FaceOff is an identity authentication and risk intelligence platform that works alongside patient monitoring systems to eliminate errors caused by misidentification and unauthorized access. While wireless medical sensors continuously track patient conditions, FaceOff ensures that every alert, medical decision and clinical action can be traced back to the correct verified person.

Strengthening Patient Identity and Clinical Accountability

One of the biggest challenges in modern hospitals is the risk of patient mix ups, impersonation attempts and documentation mistakes. With fast moving clinical activity and heavy workloads, even small identification errors can lead to serious consequences.

FaceOff addresses this risk by adding a biometric identity layer that verifies doctors, nurses and caregivers the moment they access patient dashboards. The system uses artificial intelligence based facial recognition along with a liveness check to confirm that the person logging into the system is exactly who they claim to be. This prevents unauthorized users from viewing or altering medical data and reduces the chance of clinical actions being assigned to the wrong staff member.

By combining real time patient monitoring with verified identity assurance, FaceOff creates complete accountability for every medical decision.

Improving Cybersecurity in Healthcare

Healthcare systems are increasingly targeted by data breaches and internal misuse. FaceOff brings an added layer of security through a real time anomaly detection engine. This engine constantly examines access behavior patterns and can flag unusual activity, irregular login attempts or suspicious interactions with patient records.

Hospitals can respond immediately to potential risks before damage occurs. In a time when cybercrime is becoming more sophisticated, this level of automated oversight is considered essential.
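A very simple form of access-behavior anomaly detection compares a user's current activity with their own history and flags large deviations. The sketch below uses a z-score on hourly record-access counts; the choice of feature, the `is_anomalous` name, and the limit of 3 standard deviations are assumptions for illustration, not a description of FaceOff's engine.

```python
from statistics import mean, stdev


def is_anomalous(history, current, z_limit=3.0):
    """Flag `current` if it lies more than z_limit standard deviations
    from the mean of `history` (e.g. records accessed per hour)."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > z_limit


# Illustrative counts only.
usual_hourly_access = [4, 5, 6, 5, 4, 6]
print(is_anomalous(usual_hourly_access, 6))   # False
print(is_anomalous(usual_hourly_access, 40))  # True
```

A flagged event would typically be queued for a security reviewer rather than blocked outright, since unusual access can have legitimate clinical reasons.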

Faster Patient Registration and Reduced Administrative Burden

FaceOff also simplifies the admission process through what it calls a zero touch verification method. Instead of lengthy paperwork or manual identity checks, the system can verify a patient in a matter of seconds. This reduces waiting times, shortens queues and lowers the administrative workload for hospital staff.

For patients, the process feels seamless. For hospitals, it creates a more organized and efficient intake system.

A Closed Loop Intelligent Care Ecosystem

When combined with artificial intelligence based early warning systems, FaceOff creates what experts describe as a closed loop care ecosystem. Wireless sensors monitor vital signs. The early warning dashboard alerts staff to any risk. FaceOff ensures that the person responding to the alert has verified identity authentication. Every step is secure, traceable and accurate.

This reduces the possibility of human error, strengthens clinical decision making and improves overall patient safety. As hospitals move toward advanced digital care models, technologies like FaceOff are expected to play a central role.

A Foundation for the Future of Healthcare

Healthcare leaders say that the future of medicine will depend on strong identity systems that protect both patients and staff. With the increasing use of automation, artificial intelligence and remote monitoring, the need for trustworthy verification is greater than ever.

FaceOff positions itself as that trust layer. It promises reliable identity confirmation, better cybersecurity, faster workflows and complete transparency in clinical operations.

As hospitals aim for safer and more autonomous healthcare delivery, FaceOff is poised to become one of the most important technologies supporting next generation patient care.

FaceOff’s Human-in-the-Loop (HITL) framework seamlessly aligns with the company’s Innovation Stone philosophy, which emphasizes that true intelligence must emerge from the fusion of human cognition and machine precision. Within the FaceOff ecosystem, HITL plays a foundational role by ensuring that every stage of AI reasoning—whether in multimodal perception, identity understanding, fraud detection, behavioral analysis, or cognitive decisioning—is shaped not only by algorithms but also by human judgment, ethical oversight, and domain expertise.

While the Adaptive Cognito Engine (ACE) autonomously analyzes visual signals, vocal patterns, micro-expressions, digital behavior, and contextual cues to generate real-time intelligence, HITL ensures that specialists continuously validate ambiguous outcomes, correct biases, label edge-case scenarios, and calibrate critical thresholds, making FaceOff’s intelligence inherently fair, contextual, and explainable.

This synergy becomes even more significant as it powers FaceOff’s Agentic Adaptive RAG, enabling the system to evolve dynamically based not only on data but also on human intuition and real-world experience. Every time a human reviewer refines a prediction, adds contextual reasoning, or corrects a model’s inference, FaceOff’s cognitive layer becomes sharper, culturally aware, and better aligned with sector-specific realities. In BFSI, for example, HITL ensures accurate fraud risk scoring by enabling human experts to validate behavioral anomalies such as mule account patterns, synthetic identity inconsistencies, or suspicious video-KYC cues, preventing false positives that could disrupt legitimate customers and ensuring compliance with RBI, MAS, DFSA, or FCA regulations.

In healthcare, HITL becomes essential for reviewing diagnostic decision support outputs—allowing clinicians to confirm symptom-behavior correlations, verify identity during telemedicine sessions, and validate AI-generated alerts in remote patient monitoring, ensuring patient safety and ethical accuracy in high-risk environments. In gov-tech, HITL strengthens digital public-service platforms by enabling officers to oversee e-governance identity checks, validate citizen behavior analytics during welfare disbursements, and supervise AI-based document or face verification during national ID services, ensuring transparency and minimizing exclusion errors. In defense and national security, HITL empowers intelligence officers to validate AI-interpreted threat cues such as stress indicators, deception signals, anomalous facial behavior, or multimodal reconnaissance data, ensuring that cognitive intelligence is used responsibly during interrogations, border control operations, cyber-ops, and mission-critical threat analysis.

Instead of treating AI as a closed black box, FaceOff’s HITL framework transforms every cognitive pipeline into a transparent, auditable, continuously improving intelligence fabric where humans guide the trajectory of machine reasoning. The higher the stakes, the deeper the integration of human oversight—ensuring that automated systems do not misinterpret cultural nuances, behavioral variances, or sensitive situational contexts. In detection workflows for BFSI fraud, healthcare risk, government identity assurance, and defense security, HITL prevents misclassification, reduces operational risk, and enhances regulatory trust by ensuring that every automated decision is reviewed when necessary by specialists who understand the ethical, legal, and situational implications.
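The principle that higher stakes call for deeper human oversight can be sketched as a routing rule: the model's confidence must clear a bar that rises with the stakes, otherwise the case goes to a specialist. The stakes tiers, the threshold values, and the function name below are hypothetical.

```python
# Hypothetical minimum confidence needed to skip human review, per stakes tier.
AUTO_DECISION_THRESHOLDS = {"low": 0.70, "medium": 0.85, "high": 0.95}


def needs_human_review(confidence, stakes):
    """Return True when a model output should be routed to a human reviewer."""
    return confidence < AUTO_DECISION_THRESHOLDS[stakes]


print(needs_human_review(0.90, "low"))   # False
print(needs_human_review(0.90, "high"))  # True
```

The same 0.90 confidence is accepted automatically in a low-stakes flow but escalated in a high-stakes one, which is the behavior the paragraph above describes.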

Ultimately, HITL turns FaceOff’s AI from being merely automated into a human-validated cognitive partner—one that identifies patterns but also explains them, predicts outcomes but also justifies them, scales operations but always respects human context. This balance of automation and human conscience reinforces FaceOff’s mission of delivering “Intelligence You Can Trust,” ensuring that every output is explainable, every insight verifiable, every alert contextual, and every decision shaped by the combined strengths of adaptive cognitive AI and real human judgment across BFSI, healthcare, government, and defense environments.

As global technology leaders such as Apple, Meta, Google, Samsung, and Xiaomi compete to define the next generation of wearable devices, the industry’s competitive advantage is shifting from hardware performance to cognitive intelligence.

Apple’s upcoming AirPods Pro (2026), featuring built in infrared cameras, marks a major leap toward sensory aware wearables. Yet the true transformation lies not in sensing alone but in enabling devices that can comprehend behavior, emotion, and intent in real time — wearables that understand the human experience rather than merely capturing it.

At the forefront of this evolution stands FaceOff Technologies Inc., a Delaware based deep tech company pioneering the Adaptive Cognito Engine (ACE) — a modular cognitive middleware that powers emotionally intelligent, privacy preserving, and quantum secure AI wearables.

The Market Shift: From Sensors to Sentience

Traditional wearables such as smartwatches, earbuds, and AR glasses excel at data collection but lack contextual awareness. Apple’s infrared enabled AirPods represent a new era of perceptual computing, enabling spatial mapping, gesture recognition, and environmental awareness.

However, these sensors produce massive streams of visual, biometric, and behavioral data that are meaningless without intelligent interpretation.

FaceOff’s ACE framework transforms sensor data into meaningful cognitive insights — identifying emotional tone from voice, detecting stress from microexpressions, and even measuring trust levels in digital communication.

The ACE Framework: Intelligence Beyond Hardware:

ACE serves as a cognitive operating layer that delivers perception, reasoning, memory, and trust directly within the device. Its innovation pillars include:

1. Multimodal Sensor Fusion: Combines camera, audio, and biometric signals to interpret emotion, context, and intent, enabling adaptive acoustic environments, fatigue awareness, and cognitive wellness analytics.

2. Privacy First On Device AI: Uses Federated Learning and Quantum Neuro Cryptography (QNC) to process data locally and secure all transactions against quantum level threats, preserving user privacy and global data compliance.

3. TAP Storage and Quantum Safe Storage (QSS): Introduces Trusted Adaptive Persistent Storage, an intelligent edge vault where sensitive biometric and emotional data are stored with adaptive encryption that evolves with threat landscapes. Paired with Quantum Safe Storage, FaceOff employs post quantum algorithms to ensure data integrity against quantum decryption attacks. Together, TAP and QSS create a continuously self protecting memory layer for all wearable devices using ACE.

4. Cognitive Health and Trust Analytics: Derives holistic behavioral trust and wellness scores from real time multimodal input, allowing devices to respond empathetically to stress or anxiety and evolve into emotionally intelligent assistants.

5. Agentic Adaptive RAG (Retrieval Augmented Generation): Combines contextual memory, reasoning, and conversational recall to create self evolving AI that understands past user states and adapts to long term behavioral patterns.

Apple’s Hardware Meets FaceOff’s Cognition:

Apple’s infrared enabled AirPods redefine sensory capability, while FaceOff enhances them with cognitive understanding.

Together, they bridge the gap between perception and comprehension.

• Apple contributes the hardware foundation: advanced sensors, secure silicon, and spatial compute architecture.

• FaceOff provides the cognitive and trust layer: emotional reasoning, ethical awareness, and post quantum secure intelligence.

This combination transforms a wearable device into a trusted digital twin, capable of empathetic response, contextual adaptation, and self defending communication.

The Industry Ripple Effect:

Following Apple’s lead, Meta with AR and VR wearables, Google with the Pixel ecosystem, Samsung with Galaxy AI devices, and Xiaomi with accessible smart wearables are converging toward multimodal architectures.

However, without advanced AI middleware such as FaceOff’s ACE, these ecosystems risk remaining intelligent but not truly cognitive.

FaceOff positions itself as the Intel Inside of cognitive wearables — the embedded intelligence that brings human like understanding, emotion detection, and quantum safe trust computation to any device or ecosystem.

The Future: From Data to Digital Consciousness:

The race for leadership in next generation wearables is no longer about producing smaller chips or sleeker designs but about building the most intelligent and trusted interface between humans and machines.

With Apple advancing hardware based sensing and FaceOff Technologies advancing cognitive interpretation and quantum secure storage, a new paradigm is emerging:

AI that does not just sense or respond but truly understands and protects.

As Meta, Google, Samsung, and Xiaomi evolve their multimodal ecosystems, integrating ACE with TAP Storage and Quantum Safe Storage will be essential to achieve trusted, empathetic, and resilient wearables that define the next era of human technology symbiosis.

FaceOff Technologies Inc. stands at the convergence of AI cognition, privacy, and post quantum security — the invisible intelligence powering tomorrow’s digital consciousness.

A recent experiment has sent shockwaves through the global identity verification industry as a researcher successfully created an AI-generated passport that bypassed a live KYC (Know Your Customer) verification system. The incident reveals how generative AI can now fabricate hyper-realistic synthetic identities capable of deceiving legacy verification systems, posing a serious threat to digital trust and financial security.

Using advanced generative tools such as OpenAI’s GPT-4o in combination with image synthesis models, the researcher produced a lifelike counterfeit passport and a matching AI-generated selfie in just a few minutes. This process, which earlier required extensive skill and effort, has now become alarmingly simple. When the fabricated identity was submitted to a platform relying only on visual KYC checks, the system authenticated it without raising any suspicion. This incident exposes a fundamental flaw: many KYC systems were built for a pre-generative-AI era and cannot handle the sophistication of modern synthetic fraud.

Traditional KYC systems that depend on static photo uploads, basic selfie comparisons, or rule-based automation are becoming obsolete. Deepfake and diffusion-based image generators can now produce facial imagery that is virtually indistinguishable from real photographs. Systems that only verify documents without checking against official government records or cross-validating liveness data are particularly at risk. Even liveness tests such as blink or head-turn prompts can be bypassed using advanced neural rendering and AI-powered motion synthesis.

FaceOff Technologies Multi Layer Defense

To combat these evolving challenges, FaceOff Technologies has developed a multi-layer verification framework designed specifically for the generative AI era. Its Adaptive Cognito Engine (ACE) conducts real-time multimodal analysis by combining facial, audio, behavioral, and biometric signals to authenticate genuine identities.

The facial trust engine within ACE performs precise cross-matching between live video feeds and official identity documents using spatial, temporal, and frequency-based feature mapping. Advanced liveness detection modules examine microexpressions, remote photoplethysmography (rPPG), head pose behavior, and gaze entropy to identify deepfakes and replayed content.

Each KYC session is geo-tagged, time-stamped, and cryptographically recorded for traceability and regulatory compliance. Automated optical character recognition and document validation through secure APIs enable rapid and secure onboarding while adhering to zero-trust security principles.

FaceOff’s architecture fully aligns with RBI Video KYC regulations, CERT-In cybersecurity guidelines, and MeitY data protection requirements. By fusing behavioral biometrics with explainable multimodal intelligence, FaceOff delivers next-generation security and transparency that traditional KYC systems cannot match.
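One common way to make session records tamper-evident is a hash chain, where each record's digest also covers the digest of the record before it. The source does not say which mechanism FaceOff uses, so the sketch below is only an assumption-based illustration; the field names (`session_id`, `lat`, `lon`, `timestamp`) and both function names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash, session):
    """SHA-256 over the previous hash plus a canonical JSON form of the session."""
    payload = json.dumps(session, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(chain, session):
    """Append a session record linked to the previous record's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({"session": session, "prev_hash": prev_hash,
                  "hash": _digest(prev_hash, session)})
    return chain


def verify(chain):
    """Recompute every link; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["session"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_record(log, {"session_id": "s1", "lat": 19.07, "lon": 72.87,
                    "timestamp": "2025-01-01T10:00:00Z"})
append_record(log, {"session_id": "s2", "lat": 28.61, "lon": 77.20,
                    "timestamp": "2025-01-01T10:05:00Z"})
print(verify(log))            # True
log[0]["session"]["lat"] = 0.0
print(verify(log))            # False, the first record was altered
```

A hash chain detects changes but does not prevent them, so an auditable deployment would also anchor or sign the latest hash somewhere the operator cannot rewrite.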

In essence, FaceOff restores digital trust in an era dominated by synthetic media and AI driven deception by ensuring that every verified identity reflects authentic human consistency across multiple behavioral and physiological layers.

AI-based deepfake detection uses algorithms like CNNs and RNNs to spot anomalies in audio, video, or images—such as irregular lip-sync, eye movement, or lighting. As deepfakes grow more sophisticated, detection remains challenging, requiring constantly updated models, diverse datasets, and a hybrid approach combining AI with human verification to ensure accuracy.

Deepfake technology is rapidly advancing, with models like StyleGAN3 and diffusion-based methods reducing detectable artifacts. Detection systems face issues like false positives from legitimate edits and false negatives from subtle fakes. Additionally, biased or limited training data can hinder accuracy across diverse faces, lighting, and resolutions.

The Enterprise Edition of ACE (Adaptive Cognito Engine) is a mobile-optimized AI platform that delivers real-time trust metrics using multimodal analysis of voice, emotion, and behavior to verify identity and detect deepfakes with adversarial robustness.

Scenario: A bank receives a video call from someone claiming to be a CEO requesting a large fund transfer. The call is suspected to be a deepfake.

Detection Process:

The bank’s AI-driven fraud system analyzes the video using a CNN to detect facial blending, an RNN to spot irregular blinking, and an audio check for lip-sync mismatches. When the system reports a 95% deepfake probability, a human analyst confirms the fraud and halts the transfer.
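The scenario combines several detector outputs into one probability and then hands the case to a person. A minimal sketch of that flow is a weighted average followed by an escalation rule. The individual scores, the equal weights, and the 0.9 escalation threshold below are invented for illustration; the source only states the final 95% figure and the human confirmation step.

```python
def fuse_scores(scores, weights):
    """Weighted average of per-detector deepfake scores (each in [0, 1])."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def decide(probability, escalate_at=0.9):
    """Hold the transaction for a human analyst when the fused score is high."""
    return "hold_for_analyst" if probability >= escalate_at else "proceed"


# Hypothetical detector outputs for the video call in the scenario.
scores = {"face_blending": 0.97, "blink_irregularity": 0.92, "lip_sync_mismatch": 0.96}
weights = {"face_blending": 1.0, "blink_irregularity": 1.0, "lip_sync_mismatch": 1.0}

probability = fuse_scores(scores, weights)
print(round(probability, 2), decide(probability))
```

The automated step only pauses the transfer; the final call stays with the analyst, matching the hybrid approach described earlier in the article.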

Deeptech startup Faceoff Technologies is introducing a hardware appliance, the FOAI Box, that will provide plug-and-play deepfake and synthetic fraud detection directly at the edge or within enterprise networks, eliminating the need for cloud dependency. Designed for enterprise and government use, it will be available as a one-time purchase with no recurring costs.

This makes FOAI:

| Layer | Description |
|---|---|
| Edge AI Module | On-device inference engines for 8-model FOAI stack (emotion, sentiment, deepfake, etc.) |
| TPU/GPU Optimized | Hardware accelerated inference for real-time video processing |
| Secure Enclave | Cryptographic core to protect inference logs & model parameters |
| APIs & SDKs | Custom API endpoints to integrate with enterprise infrastructure |
| Firmware OTA Support | Update models & signatures periodically without compromising privacy |

The rise of deepfakes and synthetic fraud poses unprecedented challenges to trust and security across industries like government, banking, defense, and healthcare. To address this, the vision for a Deepfake Detection-as-a-Service (DaaS) in a Box, or FOAI Box, is to deliver a plug-and-play hardware appliance that provides ultra-secure, low-latency, and scalable deepfake detection at the edge or within enterprise networks, eliminating reliance on cloud infrastructure.

The FOAI Box aims to redefine fraud-oriented AI (FOAI) by offering a standalone, hardware-based solution for detecting deepfakes and synthetic fraud in real time. Unlike cloud-based systems, which risk data breaches and latency, the FOAI Box operates locally, ensuring:

The FOAI Box addresses critical gaps in deepfake detection, a pressing issue as 70% of organizations reported deepfake-related fraud attempts in 2024 (per Deloitte). Its edge-based, cloud-independent design mitigates risks of data breaches, a concern highlighted by recent Mumbai bomb threat hoaxes and the need for secure systems in sensitive sectors. By offering a scalable, plug-and-play solution, the FOAI Box aligns with global digital-first trends:

The FOAI Box positions itself as a game-changer in the $10 billion deepfake detection market (projected by 2030). Future iterations could incorporate:


Social media companies are battling an avalanche of synthetic content: Deepfake videos spreading misinformation, character assassinations, scams, and manipulated news. Faceoff provides a plug-and-play solution.

Faceoff empowers platforms with proactive synthetic fraud mitigation using AI that thinks like a human — and checks if the video does too.


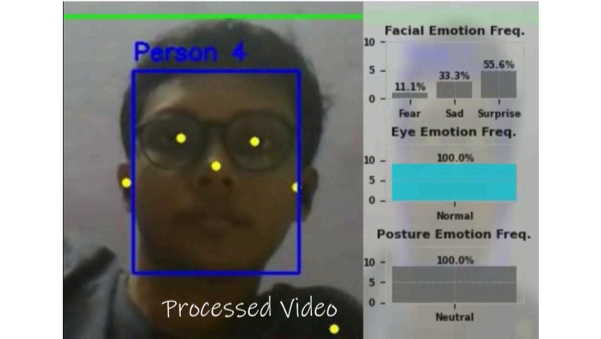

Video KYC is vital for regulated entities (financial, telecom) to verify identities remotely, ensuring RBI compliance and fraud prevention. Faceoff AI (FOAI) significantly enhances this by using advanced Emotion, Posture, and Biometric Trust Models during 30-second video interviews. FOAI's technology detects deception and verifies identity in real-time. This strengthens video KYC, especially in combating fraudulent health claims and identity fraud in immigration and finance, by offering a more robust and insightful verification method beyond traditional checks.

Video KYC is fast becoming a norm in digital banking and fintech, but traditional Video KYC checks often fail to validate authenticity, emotional cues, and AI-synthesized manipulations.

All data is processed in the client’s own cloud environment via API — ensuring GDPR and privacy compliance, while Faceoff only tracks API usage count, not personal data.

Faceoff AI’s enhanced video KYC solution revolutionizes identity verification by integrating Emotion, Posture, and Biometric Trust Models to detect fraud and verify health claims. Its ability to flag deception through micro-expressions, biometrics, and posture offers a non-invasive, efficient tool for financial institutions and immigration authorities. While challenges like deepfake resistance, cultural variability, and privacy concerns exist, FOAI’s scalability, compliance, and fraud deterrence potential make it a game-changer. With proper implementation and safeguards, FOAI can streamline KYC processes, reduce fraud, and enhance trust in digital onboarding and immigration systems.


Building a robust partner ecosystem involves collaborating with hardware manufacturers, system integrators, and technology providers to enhance FOAI’s capabilities. What follows is a detailed analysis of how FOAI can establish partnerships and integrate with the hardware ecosystem, focusing on its application in the immigration and financial sectors and drawing on general principles of technology ecosystem partnerships.

Example: Cisco, Juniper, HPE Aruba

Example: Palo Alto Networks, Crowdstrike, Zscaler, Checkpoint, Fortinet

Example: Lenovo, HP, Dell, Samsung (laptop & mobile OEMs)

Strategic partnerships with OEMs, IoT providers, and system integrators enable FOAI to deliver seamless solutions for financial institutions and immigration agencies. By leveraging APIs, edge computing, and certified devices, FOAI can address challenges like compatibility and privacy while maximizing market reach and innovation.


The objective is secure, inclusive, and deepfake-resilient air travel. DigiYatra aims to enable seamless and paperless air travel in India through facial recognition. While ambitious and aligned with Digital India, the existing Aadhaar-linked face matching system suffers from multiple real-world limitations, such as failure due to aging, lighting, occlusions (masks, makeup), or data bias (skin tone, gender transition, injury). As digital threats like deepfakes and synthetic identity fraud rise, there is a clear need to enhance DigiYatra’s verification framework.

Faceoff, a multimodal AI platform based on 8 independent behavioral, biometric, and visual models, provides a trust-first, privacy-preserving, and adversarially robust solution to these challenges. It transforms identity verification into a dynamic process based on how humans behave naturally, not just how they look.

| Limitation | Cause | Consequence |
|---|---|---|
| Aging mismatch | Static template | Face mismatch over time |
| Low lighting or occlusion | Poor camera conditions | False rejections |
| Mask, beard, or makeup | Geometric masking | Matching failures |
| Data bias | Non-diverse training | Exclusion of minorities |
| Deepfake threats | No real-time liveness detection | Risk of impersonation |
| Static match logic | No behavior or temporal features | No insight into intent or authenticity |

Faceoff runs its eight independently trained AI models on-device (or on a secure edge appliance like the FOAI Box). Each model provides a score and an anomaly likelihood, which are fused into a Trust Factor (0–10) and a Confidence Estimate.

Rather than a binary face match vs. Aadhaar, Faceoff generates a holistic trust score using:

For airports, Faceoff can run on a plug-and-play appliance (FOAI Box) that offers:

| Problem | DigiYatra Fails Because | Faceoff Handles It Via |
|---|---|---|
| Aged face image | Static Aadhaar embedding | Dynamic temporal trust from gaze/voice |
| Occlusion (mask, beard) | Facial geometry fails | Biometric + behavioral fallback |
| Gender transition | Morphs fail match | Emotion + biometric stability |
| Twins or look-alikes | Same facial features | Unique gaze/heart/audio patterns |
| Aadhaar capture errors | Poor quality | Real-time inference only |
| Low lighting | Camera fails to extract points | GAN + image restoration |
| Child growth | Face grows but is genuine | Entropy and voice congruence validation |
| Ethnic bias | Under-represented groups | Model ensemble immune to bias |
| Impersonation via video | No liveness check | Deepfake & speech sync detection |
| Emotionless spoof | Static face used | Microexpression deviation flags alert |

They are justifiable via:

Faceoff can robustly address the shortcomings of Aadhaar-based facial matching by using its 8-model AI stack and multimodal trust framework to provide context-aware, anomaly-resilient identity verification. Below is a detailed discussion on how Faceoff can mitigate each real-world failure case, improving DigiYatra’s reliability, security, and inclusiveness:

Problem Statement: Traditional face matchers use static embeddings from a single model, which degrade with age.

Faceoff Solution:

Problem Statement: Facial recognition fails if the person grows a beard, wears makeup, etc.

Faceoff Solution:

Problem Statement: Surgery or injury changes facial geometry.

Faceoff Solution:

Problem Statement: Face match fails due to blurry or dim live image.

Faceoff Solution:

Problem Statement: Face shape changes drastically from child to adult.

Faceoff Solution:

Problem Statement: Covering parts of the face makes recognition unreliable.

Faceoff Solution:

Problem Statement: Facial recognition may confuse similar-looking people.

Faceoff Solution:

Problem Statement: Bad quality Aadhaar image affects facial match.

Faceoff Solution:

Problem Statement: Face models trained on skewed datasets may have racial bias.

Faceoff Solution:

Problem Statement: Appearance may shift drastically post-transition.

Faceoff Solution:

| Issue | Why Aadhaar Fails | Faceoff Countermeasure |
|---|---|---|
| Aging | Static template mismatch | Live behavioral metrics (rPPG, gaze) |
| Appearance Change | Geometry drift | Multimodal verification |
| Injury/Surgery | Facial landmark mismatch | Voice & physiology verification |
| Low Light | Poor capture | GAN restoration + biometric fallback |
| Age Shift | Face morph | Temporal entropy & voice |
| Occlusion | Feature hiding | Non-visual trust signals |
| Twins | Same facial data | Biometric/behavioral divergence |
| Bad Aadhaar image | Low quality | Real-time fusion scoring |
| Ethnic Bias | Dataset imbalance | Invariant biometric/voice/temporal AI |
| Gender Transition | Appearance change | Behaviorally inclusive AI |

Faceoff computes Trust Factor using a weighted fusion of the following per-model confidence signals:

All of these are statistically fused (e.g., via Bayesian weighting) and compared against real-world baselines, producing a 0–10 Trust Score.

Higher Trust = More Human, Natural, and Honest.

Low Trust = Possibly Fake or Incongruent.
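As a rough illustration of the fusion idea, the sketch below combines per-model scores into a 0–10 value using a plain confidence-weighted mean. This is a simplification chosen for clarity, not the Bayesian weighting mentioned above and not Faceoff's actual method; the `ModelSignal` type, the score convention (1.0 = natural, 0.0 = anomalous), and the use of confidence as the weight are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class ModelSignal:
    """One model's output (hypothetical shape, for illustration only)."""
    name: str
    score: float       # assumed convention: 1.0 = natural/authentic, 0.0 = anomalous
    confidence: float  # assumed to lie in [0, 1]; used here as the fusion weight


def trust_factor(signals: list[ModelSignal]) -> float:
    """Confidence-weighted mean of the per-model scores, rescaled to 0-10."""
    total_weight = sum(s.confidence for s in signals)
    if total_weight == 0:
        raise ValueError("at least one signal needs non-zero confidence")
    fused = sum(s.score * s.confidence for s in signals) / total_weight
    return round(10.0 * fused, 2)


signals = [
    ModelSignal("deepfake", score=0.9, confidence=1.0),
    ModelSignal("rppg", score=0.5, confidence=0.5),
]
print(trust_factor(signals))  # 7.67
```

A low-confidence model (for example, rPPG under poor lighting) pulls the result less than a high-confidence one, which is the main reason to weight rather than average.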


In today’s deepfake-driven digital landscape, FaceOff Technologies (FO AI) offers a vital solution for building corporate trust. Through its proprietary Opinion Management Platform (Trust Factor Engine) and Smart Video capabilities, FO AI enables businesses, partners, celebrities, and HNIs to collect verified, video-based customer feedback, enhancing service quality and brand credibility.

With 61% of people wary of AI systems (KPMG 2023), authentic feedback has become essential. FO AI’s Trust Factor Engine detects deepfakes in real-time by analyzing micro-expressions, voice inconsistencies, and behavioral cues, ensuring authenticity.

Smart Video technology allows full customization—editing video duration, adding headlines, subheadings, and titles—to maximize social media engagement and brand reach. Applicable across industries like retail, hospitality, and finance, verified video feedback delivers deeper customer insights, strengthens trust, and amplifies customer engagement.

Corporates can unlock FO AI’s full potential by integrating it with CRM systems, launching pilot video campaigns, training teams for trust-centric communication, and utilizing its analytics for a feedback-driven culture.

As AI reshapes industries, trust is paramount. FO AI empowers businesses to combat misinformation and deliver authentic, high-impact customer experiences in an increasingly skeptical digital world.


1. Executive Summary & Introduction

1.1. Unique Challenges of Puri Pilgrimage Security:

The Puri Ratha Yatra, daily temple operations at the Shree Jagannath Mandir, and the management of vast numbers of pilgrims present unique and immense security, safety, and crowd management challenges. These include preventing stampedes, managing dense crowds in confined spaces, identifying individuals under distress or posing a threat, ensuring the integrity of queues, and protecting critical infrastructure and VIPs. Traditional surveillance often falls short in proactively identifying and responding to the subtle behavioral cues that precede major incidents.

1.2. The Faceoff AI Solution Proposition:

This proposal details the application of Faceoff's Adaptive Cognito Engine (ACE), a sophisticated multimodal AI framework, to provide a transformative layer of intelligent security and management for the Puri Ratha Yatra, the Jagannath Mandir complex, and associated pilgrimage activities. By analyzing real-time video (and optionally audio) feeds from existing and new surveillance infrastructure, Faceoff AI aims to provide security personnel and temple administration with:

This solution is designed with privacy considerations and aims to augment human capabilities for a safer and more secure pilgrimage experience.

For this specific context, the following ACE modules are paramount:

Trust Fusion Engine: Aggregates outputs into a "Behavioral Anomaly Score" or "Risk Index" for individuals/crowd segments, and an "Emotional Atmosphere Index" for specific zones.
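One way to picture this aggregation step is to group per-person observations by zone and reduce them to two numbers. The sketch below is illustrative only: the tuple layout, the value ranges, and the choice of a worst-case (maximum) risk alongside an averaged emotional index are assumptions, not details from the proposal.

```python
from collections import defaultdict
from statistics import mean


def zone_indices(observations):
    """Group per-person observations by zone and summarize each zone.

    observations: iterable of (zone_id, anomaly_score, emotional_valence),
    with anomaly_score assumed in [0, 1] and emotional_valence in [-1, 1].
    """
    by_zone = defaultdict(list)
    for zone_id, anomaly, valence in observations:
        by_zone[zone_id].append((anomaly, valence))
    return {
        zone: {
            # Worst individual anomaly in the zone (assumed definition of risk).
            "risk_index": max(a for a, _ in rows),
            # Average valence across everyone observed in the zone.
            "emotional_atmosphere_index": mean(v for _, v in rows),
        }
        for zone, rows in by_zone.items()
    }


obs = [("gate_a", 0.2, 0.5), ("gate_a", 0.8, -0.5), ("gate_b", 0.1, 0.25)]
print(zone_indices(obs))
```

Using the maximum for risk keeps a single high-anomaly individual from being averaged away in a dense crowd, while the mean suits a zone-level mood indicator.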


1. Executive Summary & Introduction

1.1. Challenges in Bus Transportation:

The bus transportation sector, a vital component of urban and intercity mobility, faces persistent challenges related to driver fatigue and distraction, passenger safety (assaults, altercations, medical emergencies), fare evasion, operational efficiency, and ensuring the integrity of incidents when they occur. Traditional CCTV systems are primarily reactive, offering post-incident review capabilities but limited proactive intervention.

1.2. The Faceoff AI Solution Proposition:

Faceoff's Adaptive Cognito Engine (ACE), a multimodal AI framework, offers a transformative solution by providing real-time behavioral and physiological analysis within buses and at terminals. By integrating Faceoff with existing or new in-vehicle and station camera systems, transport operators can proactively identify risks, enhance safety for drivers and passengers, improve operational oversight, and gather objective data for incident management and service improvement. This document details the technical implementation and use cases of Faceoff AI in the bus transportation sector.

For bus environments, specific ACE modules will be prioritized:

In-Vehicle System ("Faceoff Bus Guardian"):

Driver Alert System (Optional): Small display, audible alarm, or haptic feedback device (e.g., vibrating seat) to alert the driver to their own fatigue/distraction or a critical cabin event if direct intervention is possible.

Real-Time Alert Transmission:

Batch Data Upload (Optional): Non-critical aggregated data or full incident videos (for confirmed alerts) can be uploaded in batches when the bus returns to the depot or during off-peak hours to manage data costs.
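The split between real-time alerts and deferred batch uploads can be sketched as a small router. Everything here is hypothetical: the `BusEventRouter` name, the event dictionary shape, and the 0.7 severity cut-off are placeholders for illustration, and the lists stand in for a real network transmitter and upload queue.

```python
from dataclasses import dataclass, field


@dataclass
class BusEventRouter:
    """Routes events to immediate transmission or to a depot batch queue."""
    severity_threshold: float = 0.7  # hypothetical cut-off for "critical"
    sent_now: list = field(default_factory=list)     # stand-in for a live uplink
    batch_queue: list = field(default_factory=list)  # held until the depot

    def handle(self, event: dict) -> str:
        if event["severity"] >= self.severity_threshold:
            self.sent_now.append(event)
            return "realtime"
        self.batch_queue.append(event)
        return "batched"

    def flush_at_depot(self) -> list:
        """Return everything queued for batch upload and clear the queue."""
        uploaded, self.batch_queue = self.batch_queue, []
        return uploaded


router = BusEventRouter()
print(router.handle({"type": "driver_drowsy", "severity": 0.9}))  # realtime
print(router.handle({"type": "door_fault", "severity": 0.2}))     # batched
```

Only critical events consume mobile data during the trip; the rest wait for `flush_at_depot()`, which matches the cost-management goal described above.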

Use Case: Real-Time Driver Drowsiness and Distraction Detection.

Use Case: Driver Stress and Health Monitoring.

Technical Depth: Facial emotion (anger, stress), vocal tone (if driver-mic available), rPPG (heart rate variability), and SpO2 are analyzed for signs of acute stress, agitation, or potential medical emergencies (e.g., cardiac event).

Implementation: Alerts command center to unusual driver physiological or emotional states.

Benefit: Allows for timely intervention in case of driver health issues or extreme stress, preventing potential incidents.
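For the heart rate variability signal mentioned in this use case, one commonly used summary is RMSSD, the root mean square of successive differences between inter-beat intervals. The sketch below assumes an rPPG module has already produced inter-beat intervals in milliseconds; the alert thresholds are placeholders for illustration, not clinical or vendor-supplied values.

```python
import math


def rmssd_ms(ibi_ms):
    """Root mean square of successive differences between inter-beat intervals."""
    if len(ibi_ms) < 2:
        raise ValueError("need at least two inter-beat intervals")
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(ibi_ms):
    """Average heart rate implied by the mean inter-beat interval."""
    return 60_000 / (sum(ibi_ms) / len(ibi_ms))


def driver_alert(ibi_ms, max_bpm=120.0, min_rmssd_ms=15.0):
    """Return the reasons (possibly none) for alerting the command center.

    max_bpm and min_rmssd_ms are hypothetical thresholds.
    """
    reasons = []
    if mean_heart_rate_bpm(ibi_ms) > max_bpm:
        reasons.append("elevated_heart_rate")
    if rmssd_ms(ibi_ms) < min_rmssd_ms:
        reasons.append("low_hrv")
    return reasons


print(driver_alert([800, 810, 790, 805]))  # []
print(driver_alert([450, 452, 449, 451]))  # ['elevated_heart_rate', 'low_hrv']
```

In practice such thresholds would need per-driver baselines and validation, since camera-based estimates are noisier than contact sensors.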


The introduction of facial recognition for cash withdrawals across countrywide ATM networks marks a significant leap in banking accessibility and security. This initiative, potentially leveraging the Aadhaar ecosystem for seamless cardless transactions and supporting services like video Know Your Customer (KYC) and account opening, sets the stage for further innovation. However, as facial recognition becomes mainstream, the sophistication of fraud attempts, including presentation attacks (spoofing) and identity manipulation, will inevitably increase.

"Faceoff AI," with its advanced multimodal Adaptive Cognito Engine (ACE), offers a unique opportunity to integrate with existing infrastructure, providing a robust next-generation layer of trust, liveness detection, and behavioral intelligence. This will not only fortify security but also enhance the user experience by ensuring genuine interactions are swift and secure.

Faceoff AI's 8 independent modules (Deepfake Detection, Facial Emotion, FETM Ocular Dynamics, Posture, Speech Sentiment, Audio Tone, rPPG Heart Rate, SpO2 Oxygen Saturation) will be integrated to augment the respective existing ATM functionalities.

By integrating Faceoff AI's advanced multimodal capabilities, the bank can significantly elevate the security, trustworthiness, and user experience of its facial recognition ATM network. This collaboration will not only provide robust defense against current and future fraud attempts, including sophisticated deepfakes and presentation attacks, but also enable more intuitive and supportive customer interactions. This positions the bank at the vanguard of AI-driven innovation in the Indian BFSI sector, paving the way for a new standard in secure, cardless, and intelligent self-service banking.


While specific technical details about Faceoff Technologies (FO AI) technology are not publicly detailed in available sources, we can infer its potential role based on its described function as a multi-model AI for deepfake detection and trust factor assessment. Below, I outline how such a technology could theoretically improve efficiency for Facebook (Meta) users, particularly in the context of the TAKE IT DOWN Act and Meta’s content ecosystem:

While Meta’s content amplification drives engagement, it can exacerbate the spread of deepfakes, as seen in past controversies over misinformation. The TAKE IT DOWN Act addresses this by enforcing accountability, but relying solely on legislation may be insufficient without technological solutions. FO AI detection offers a proactive approach, but its effectiveness depends on Meta’s willingness to prioritize user safety over algorithmic reach. The opposition from Reps. Massie and Burlison highlights concerns about overregulation, suggesting that voluntary adoption of technologies like Faceoff could balance innovation with responsibility.

FO AI deepfake detection technology could significantly enhance efficiency for Meta users by streamlining content verification, improving safety, reducing moderation burdens, and empowering decision-making. Integrated with Meta’s AI ecosystem and aligned with the TAKE IT DOWN Act, it could create a safer, more efficient user experience. However, successful implementation requires addressing technical, privacy, and commercial challenges.

For more details on Meta’s AI initiatives, visit https://about.meta.com. For information on the TAKE IT DOWN Act, refer to official congressional records.


Executive Summary & Introduction

Unique Challenges of Puri Pilgrimage Security:

The Puri Ratha Yatra, daily temple operations at the Shree Jagannath Mandir, and the management of vast numbers of pilgrims present unique and immense security, safety, and crowd management challenges. These include preventing stampedes, managing dense crowds in confined spaces, identifying individuals under distress or posing a threat, ensuring the integrity of queues, and protecting critical infrastructure and VIPs. Traditional surveillance often falls short in proactively identifying and responding to the subtle behavioral cues that precede major incidents.

The Faceoff AI Solution Proposition:

This proposal details the application of Faceoff's Adaptive Cognito Engine (ACE), a sophisticated multimodal AI framework, to provide a transformative layer of intelligent security and management for the Puri Ratha Yatra, the Jagannath Mandir complex, and associated pilgrimage activities. By analyzing real-time video (and optionally audio) feeds from existing and new surveillance infrastructure, Faceoff AI aims to provide security personnel and temple administration with:

This solution is designed with privacy considerations and aims to augment human capabilities for a safer and more secure pilgrimage experience.

Trust Fusion Engine: Aggregates outputs into a "Behavioral Anomaly Score" or "Risk Index" for individuals/crowd segments, and an "Emotional Atmosphere Index" for specific zones.

Network Infrastructure:

Ethical Considerations & Privacy Safeguards:


Objective: Practical Augmentation of Polygraph Examinations

To provide polygraph examiners with actionable, AI-driven behavioral and non-contact physiological insights that complement traditional polygraph data, thereby improving the ability to:

During a polygraph examination, the subject is typically seated and video/audio recorded. Faceoff ACE would analyze this recording.

1. Facial Emotion Recognition Module (Micro-expressions Focus):

2. Eye Tracking Emotion Analysis Module (FETM):

3. Posture-Based Behavioral Analysis Module:

4. Heart Rate Estimation via Facial Signals (rPPG):

5. Speech Sentiment Analysis Module:

6. Audio Tone Sentiment Analysis Module:

7. Oxygen Saturation Estimation (SpO2) Module (Experimental):

Integration with Polygraph Examiner's Workflow:

The Faceoff AI system would be presented as an investigative aid providing correlative indicators, not as a standalone "lie detector" or a replacement for the comprehensive judgment of a trained polygraph examiner. Its results would be one part of the total evidence considered. Validation studies comparing polygraph outcomes with and without Faceoff augmentation would be essential for establishing its practical utility and admissibility.


In an era defined by heightened surveillance needs, the proliferation of digital misinformation, and ever-evolving security threats, conventional monitoring systems are proving insufficient. Faceoff AI Smart Spectacles address this critical gap by offering an advanced, AI-driven trust assessment solution. Leveraging multimodal intelligence from eight integrated AI models, these smart spectacles deliver real-time, high-accuracy behavioral and physiological insights directly to the wearer and connected command centers.

This proposal outlines the concept, technology, use cases, and strategic advantages of deploying Faceoff AI Smart Spectacles, particularly for national security, law enforcement, and enterprise security applications. Our solution moves beyond simple binary detection (real/fake, truth/lie) to provide granular, human-like evaluations of emotional and behavioral authenticity, ensuring a proactive, tech-enabled, and intelligence-driven future.

The digital age has brought unprecedented connectivity but also new vulnerabilities. The ability to synthetically manipulate media (deepfakes) and the speed at which misinformation can spread demand a new paradigm in trust and security. Frontline personnel in law enforcement, defense, and critical infrastructure security require tools that can assess situations and individuals quickly, accurately, and discreetly. Faceoff AI Smart Spectacles are engineered to meet this demand, transforming standard eyewear into a powerful on-the-move intelligence gathering and trust assessment terminal.

At the heart of the Faceoff AI Smart Spectacles is the Trust Factor Engine, powered by 8 integrated AI models that span vision, audio, and physiological signal analysis. This engine provides a holistic understanding of human behavior and content authenticity:

Unlike traditional systems, Faceoff assigns trust scores on a scale of 1 to 10, offering far more granular and human-like evaluations.

The deployment of Faceoff’s Smart Spectacle system can be transformative:

To convert this concept into an actual product prototype, we propose collaboration with:

Faceoff AI Smart Spectacles fuse cutting-edge AI with real-world practicality, offering a paradigm shift from reactive surveillance to proactive, intelligence-driven security. They provide not just data but behavioral context, emotional depth, and trust quantification, all delivered in real time and with full privacy compliance. As India and the world face rising cyber and physical security threats, tools like the Faceoff AI Smart Spectacles will be vital in shaping a proactive, tech-enabled, and intelligence-driven future for law enforcement, defense, and enterprise security, ultimately enhancing the safety and security of our communities and nation.


FaceOff's state-of-the-art technology uses AI-powered behavioural biometric authentication to prevent fraud across platforms such as PayTM, BharatPe, GPay, UPI 123 Pay, NEFT, and RTGS, ensuring real-time, secure transactions.

The Unified Payments Interface (UPI) has revolutionized digital payments in India, offering unparalleled convenience and accessibility. However, its widespread adoption has also made it a prime target for increasingly sophisticated cyber and UPI fraud. Current authentication methods, often relying on PINs, can be compromised through social engineering, phishing, shoulder-surfing, or malware. While standard facial recognition is a step forward, it remains vulnerable to presentation attacks (spoofing) and cannot verify the user's intent or liveness at the moment of payment.

FacePay, a new authentication strategy powered by Faceoff AI's Adaptive Cognito Engine (ACE), proposes a solution. FacePay integrates a rapid, multimodal, and behavioral biometric check directly into the UPI payment workflow. It ensures that a transaction is only authorized if a live, genuine, and authentically behaving user is present and actively approving the payment, thereby providing a powerful defense against modern UPI fraud.

FacePay is designed to be integrated as a final, seamless authentication step within any existing UPI application (e.g., Google Pay, PhonePe, Paytm, or a bank's native app).

Technical Workflow & Implementation Strategy:

1. Instead of (or in addition to) the PIN entry screen, the UPI app activates the front-facing camera and triggers the integrated Faceoff Lite SDK.

2. The UI displays a simple instruction: "Please look at the camera to approve your payment of ₹[Amount]."
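The approval step can be sketched as a prompt plus a simple gate on the check result. This is a minimal illustration under stated assumptions: `FaceCheckResult` is a hypothetical stand-in for whatever the Faceoff Lite SDK returns (its real API is not described here), and the minimum trust value of 7.0 is a placeholder.

```python
from dataclasses import dataclass


@dataclass
class FaceCheckResult:
    """Hypothetical summary of one camera check (not the real SDK type)."""
    is_live: bool          # a live person, not a replay or mask
    identity_match: bool   # face matches the enrolled account holder
    trust_factor: float    # 0-10 behavioral trust score


def approval_prompt(amount_rupees: float) -> str:
    """Build the on-screen instruction shown while the camera is active."""
    return f"Please look at the camera to approve your payment of ₹{amount_rupees:,.2f}."


def authorize_payment(result: FaceCheckResult, min_trust: float = 7.0) -> str:
    """Approve only when the user is live, matched, and behaving authentically."""
    if result.is_live and result.identity_match and result.trust_factor >= min_trust:
        return "approve"
    return "decline"


print(approval_prompt(250))
print(authorize_payment(FaceCheckResult(True, True, 8.2)))   # approve
print(authorize_payment(FaceCheckResult(False, True, 9.0)))  # decline
```

A real integration would likely distinguish failure reasons (for example, falling back to PIN entry on a low score) rather than returning a single "decline".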


New Delhi: FaceOff has unveiled FaceGuard, an AI-powered solution designed to protect users from rising threats of video call scams such as Digital Arrest and Sextortion. With video calls increasingly exploited by fraudsters impersonating officials or creating intimate threats, FaceGuard introduces a two-fold defense: replacing the user’s real face with a live digital avatar and analyzing the caller in real time for signs of fraud.

The core innovation behind FaceGuard is its combination of proactive privacy and reactive intelligence. Users create a secure, expressive digital avatar during a one-time setup. This avatar mimics their facial expressions and movements using 3D mesh modeling and facial tracking, ensuring their real face is never exposed during unknown video calls. Simultaneously, the caller’s video feed is scanned using the Faceoff Lite engine to detect suspicious behaviors and synthetic media.

FaceGuard’s Faceoff Lite engine is optimized for mobile and leverages advanced AI modules for real-time analysis. It detects deepfakes, screen replays, voice clones, and behavioral red flags such as reading scripts, unnatural eye movement, and emotionally manipulative expressions. It also evaluates tone, posture, and gaze patterns to compute a “Trust Factor” score for the caller. Alerts and fraud warnings are shown through a subtle, non-intrusive overlay during the call.

The system includes an on-device fraudster identity database, allowing users to store facial embeddings of confirmed scammers. If a known fraudster tries to contact the user again, FaceGuard will block the call before it begins. Optionally, users can anonymously contribute to a community-powered threat database, improving collective defense across the platform.

During a call, if the AI engine detects threats, it alerts the user with a Trust Factor score and reasons (e.g., "Script Reading Detected"). The user can then choose to confirm and block the fraud, immediately terminating the call and updating their personal fraudster log. This privacy-first approach ensures all sensitive data remains local unless the user consents to share anonymized threat signatures.
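The on-device fraudster lookup described above can be pictured as a nearest-match test over stored embeddings. The sketch below uses cosine similarity, a common choice for comparing face embeddings, though the source does not say which metric FaceGuard uses; the `FraudsterLog` class, the 0.8 threshold, and the tiny 2-dimensional vectors are illustrative assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class FraudsterLog:
    """Local store of embeddings for callers the user has confirmed as scammers."""

    def __init__(self, threshold: float = 0.8):  # hypothetical match threshold
        self.threshold = threshold
        self._embeddings = []

    def add(self, embedding):
        self._embeddings.append(list(embedding))

    def should_block(self, caller_embedding) -> bool:
        """True if the caller is close enough to any stored fraudster embedding."""
        return any(
            cosine_similarity(caller_embedding, known) >= self.threshold
            for known in self._embeddings
        )


log = FraudsterLog()
log.add([1.0, 0.0])
print(log.should_block([0.9, 0.1]))  # True
print(log.should_block([0.0, 1.0]))  # False
```

Because only embeddings are kept in memory on the device, nothing in this sketch needs to leave the phone, which is consistent with the privacy-first design described above.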
FaceGuard is designed for flexible deployment, either as a standalone mobile app or as an SDK for integration into platforms like Zoom, WhatsApp, Telegram, or Google Meet. This makes it ideal for both personal safety and enterprise use, especially in high-stakes virtual meetings where verifying identities and safeguarding participants is crucial. By combining privacy protection, AI-based scam detection, and community-driven defense, FaceGuard offers a comprehensive security layer against emerging video call threats. It empowers users to stay safe and anonymous, prevents misuse of facial footage, and enables early detection of sophisticated fraud attempts, all in real time. For more, visit www.faceoff.world.


In our journey to build FaceOff, we initially explored hosting entirely on the cloud, evaluating AWS and Azure as potential platforms.

With AWS, we found the costs to be prohibitively high. Their approach required us to develop strictly within their ecosystem, using their pre-built software stack. This created a long-term dependency, ensuring AWS would continue to generate recurring revenue from us indefinitely. While it fit into their business model, it did not align with our budgetary goals. We also incurred some financial losses during this phase.

With Azure, the challenge was different. Their infrastructure lacked the capability to run our solution, an advanced multi-model AI setup requiring eight different AI engines to operate simultaneously. This made Azure an impractical option for our needs.

We did not proceed with Google Cloud Platform (GCP) due to its inherent limitations: services and credits are only available if hosted on GCP infrastructure, and the cloud credits offered are minimal, serving as small incentives rather than a viable operational strategy.

As a result, we decided to re-engineer FaceOff for a private cloud deployment, designing it to be truly cloud-platform-independent and template-agnostic. This ensures maximum flexibility, eliminates vendor lock-in, and allows our AI models to run seamlessly across diverse infrastructures without being tied to a single provider’s ecosystem.

A cloud-platform-independent and template-agnostic AI model is designed for seamless deployment across heterogeneous environments, including AWS, Microsoft Azure, Google Cloud Platform, Oracle Cloud Infrastructure (OCI), and on-premises infrastructure, without requiring significant reconfiguration or redevelopment. This portability is enabled through adherence to open standards, abstraction from vendor-specific dependencies, and encapsulation within containerized environments such as Docker, orchestrated via Kubernetes or equivalent platforms.
The template-agnostic approach further decouples the model from fixed deployment blueprints, allowing integration with a variety of Infrastructure-as-Code (IaC) frameworks, CI/CD pipelines, and orchestration methods. Such an architecture mitigates vendor lock-in, increases operational flexibility, and optimizes scalability and cost efficiency across different deployment contexts.
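To make the idea of abstracting away vendor-specific dependencies more concrete, here is a minimal Python sketch, not FaceOff's actual deployment code. It assumes each AI engine ships as a container image and that every target environment runs Kubernetes. The names `EngineSpec`, `TargetProfile`, `render_deployment`, the image URL, and the `accelerator` node label are all hypothetical. The only real convention used is the standard Kubernetes `apps/v1` Deployment manifest layout.

```python
import json
from dataclasses import dataclass, field


@dataclass
class EngineSpec:
    """Provider-neutral description of one containerized AI engine (hypothetical)."""
    name: str
    image: str
    replicas: int = 1


@dataclass
class TargetProfile:
    """The only place where environment-specific settings live (hypothetical)."""
    name: str
    node_selector: dict = field(default_factory=dict)


def render_deployment(engine: EngineSpec, target: TargetProfile) -> dict:
    """Build a Kubernetes apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": engine.name}
    pod_spec = {"containers": [{"name": engine.name, "image": engine.image}]}
    if target.node_selector:
        # Environment-specific scheduling hints stay out of the engine spec.
        pod_spec["nodeSelector"] = dict(target.node_selector)
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": engine.name, "labels": labels},
        "spec": {
            "replicas": engine.replicas,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels}, "spec": pod_spec},
        },
    }


# Illustrative values only: the same engine spec rendered for two targets.
engine = EngineSpec(name="face-engine",
                    image="registry.example.com/face-engine:1.0",
                    replicas=2)
on_prem = TargetProfile(name="on-prem")
gpu_cloud = TargetProfile(name="any-cloud", node_selector={"accelerator": "gpu"})

manifests = {t.name: render_deployment(engine, t) for t in (on_prem, gpu_cloud)}
print(json.dumps(manifests["on-prem"], indent=2))
```

The design choice illustrated here is that the engine description never mentions a provider. Anything that differs between environments is confined to a small profile object, so moving to another cloud or to on-premises hardware means adding a profile rather than rewriting the engine definition.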

FaceOff AI (FO AI), from FaceOff Technologies, is a multimodal platform for digital authenticity, deepfake detection, and behavioral authentication. FaceOff AI Lite addresses real-time analytics.

Powered by the Adaptive Cognito Engine (ACE), it fuses eight biometric and behavioral signals—including facial micro-expressions, posture emotions, voice sentiment, and eye movement—to generate real-time trust and confidence scores and emotional-congruence insights within seconds.

Behavioral biometric authentication uses unique patterns of human behavior to verify identity, analyzing how individuals interact with devices. Unlike traditional methods such as passwords and PINs, which are static and vulnerable to theft, or physical biometrics such as fingerprints, which rely on fixed traits, behavioral biometrics focuses on dynamic, context-driven actions.

Key advantages include:

Faceoff AI incorporates behavioral authentication by analyzing cues like facial micro-expressions and voice sentiment, providing real-time trust scores for applications like online video KYC or fraud detection. This approach strengthens security in industries like banking and the judiciary, where traditional methods fall short against sophisticated threats like deepfakes.

This enables real-time verification and fraud prevention across industries like banking, defense, judiciary, education, and smart cities.

Advanced facial recognition technology from Faceoff AI can transform ATM networks, enhancing both security and user experience and setting a new benchmark in intelligent self-service banking.

Key features include:

Faceoff AI tackles the growing digital authenticity crisis, where traditional security measures fall short against sophisticated deepfakes and synthetic fraud. Its real-time analytics empower organizations to make informed decisions quickly, enhancing security and trust in critical sectors.

Sector-wise: Industries That Will Benefit

Implementing FO AI will help with preventing stampedes, managing dense crowds in confined spaces, identifying individuals who are in distress or pose a threat, ensuring the integrity of queues, and protecting critical infrastructure and VIPs.

Enhancing DigiYatra with Faceoff AI Stack: Toward Secure, Inclusive, and Deepfake-Resilient Air Travel

The Faceoff AI Solution Proposition:

This proposal details the application of Faceoff's Adaptive Cognito Engine (ACE), a sophisticated multimodal AI framework, to provide a transformative layer of intelligent security and management. By analyzing real-time video (and optionally audio) feeds from existing and new surveillance infrastructure, Faceoff AI aims to provide security personnel and temple administration with:

This solution is designed with privacy considerations and aims to augment human capabilities for a safer and more secure pilgrimage experience.

Adaptive Cognito Engine (ACE) - Key Modules for FaceOff Lite

Trust Fusion Engine: Aggregates outputs into a "Behavioral Anomaly Score" or "Risk Index" for individuals/crowd segments, and an "Emotional Atmosphere Index" for specific zones.
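The source does not say how the Trust Fusion Engine combines module outputs, so the following Python sketch should be read as one plausible illustration, assuming a simple weighted mean of per-module anomaly scores in the range 0 to 1. The module names, the weights in `DEFAULT_WEIGHTS`, and the function `fuse_scores` are hypothetical and are not part of ACE.

```python
from typing import Mapping

# Hypothetical module names and weights, chosen only for illustration.
DEFAULT_WEIGHTS = {
    "facial_micro_expressions": 2.0,
    "posture": 1.0,
    "voice_sentiment": 1.0,
    "eye_movement": 1.0,
}


def fuse_scores(scores: Mapping[str, float],
                weights: Mapping[str, float] = DEFAULT_WEIGHTS) -> float:
    """Return the weighted mean of per-module anomaly scores in [0, 1].

    Modules with no score (for example, when there is no audio feed) are
    simply absent from `scores`; the remaining weights are renormalized.
    """
    total = 0.0
    weight_sum = 0.0
    for module, score in scores.items():
        if module not in weights:
            raise KeyError(f"no weight configured for module {module!r}")
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {module!r} must be in [0, 1]")
        total += weights[module] * score
        weight_sum += weights[module]
    if weight_sum == 0.0:
        raise ValueError("at least one weighted module score is required")
    return total / weight_sum


# Video-only example: the audio-based module is absent from the input.
video_only = {
    "facial_micro_expressions": 0.2,
    "posture": 0.6,
    "eye_movement": 0.4,
}
risk_index = fuse_scores(video_only)
print(f"Behavioral Anomaly Score: {risk_index:.2f}")
```

Renormalizing over the modules that actually reported keeps the score comparable between video-only feeds and feeds that also carry audio. A production system would likely need calibrated weights and per-zone aggregation for the "Emotional Atmosphere Index", neither of which is attempted here.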

Empower Your Banking Security Today with FO AI

Transform your ATM network with FaceOff AI—combining advanced facial recognition and a Biological Behaviour Algorithm (BBA) to elevate security and deliver a seamless, intelligent self-service experience.

FaceOff AI's facial recognition and Biological Behaviour Algorithm upgrade ATM networks, strengthening security and UX while setting a new benchmark in intelligent self-service banking.

Deploy FaceOff AI with BBA to authenticate in seconds, deter fraud, and delight customers at every touchpoint.

Modernize ATMs with FaceOff AI and BBA for stronger protection and a superior user experience. Book a demo today.

FaceOff Lite is a lightweight version of Faceoff AI. Designed for low-end systems without a GPU, it aligns with the platform's privacy-first, on-device processing architecture. FaceOff Lite can run on edge devices (CCTV cameras, webcams, etc.) and on ordinary desktops and laptops, with no GPU required.

Deepfakes have rapidly shifted from fringe experiments to one of the most pressing global security challenges. Fueled by advances in generative AI, they can replicate voices, faces, and behaviors with alarming precision—making it harder than ever to distinguish truth from manipulation.

The emergence of synthetic media platforms is significantly shaping the Deepfake AI landscape. What began as entertainment gimmicks has now expanded into fraud, political disinformation, cyber extortion, and digital harassment.

Advances in GANs (Generative Adversarial Networks) and diffusion models have made deepfakes easier, cheaper, and more convincing. What once required high-end computing clusters can now be done on laptops or even smartphones. Open-source code and pre-trained models have democratized access, accelerating both innovation and abuse.

The continuous refinement of GANs is expected to further elevate the quality of deepfake content, solidifying its role in the media landscape. With this, deepfake AI technology is increasingly being integrated into content production processes across various sectors. What started with celebrity hoaxes has now become a dangerous tool for fraud, political disinformation, cybercrime, and personal harassment, eroding public trust in digital media.

The threat is severe and widespread. Banks are targeted by sophisticated scams, politicians face fabricated speeches, and individuals suffer reputational harm from non-consensual synthetic content. As AI models become more powerful and accessible, creating deepfakes is now cheaper and easier than ever, allowing them to spread across the internet like a technological parasite.

98% of deepfakes are non-consensual porn, nearly all targeting women. In 2023, production surged 464% year-over-year, with top sites cataloging almost 4,000 female celebrities plus countless private victims.

Political deepfakes, though just ~2% of total, are rising fast—82 cases were recorded across 38 countries between mid-2023 and mid-2024, most during election periods, spreading fake speeches, endorsements, and smears.

Deepfakes are no longer confined to the easily accessible surface web. A much greater volume of this dangerous content resides within the deep web and dark web, hidden from public view and posing an even more insidious threat. Until now, a significant challenge has been accurately measuring the scale of this problem across all three layers of the internet.

Notably, the cryptocurrency sector has been hit especially hard, with deepfake-related incidents in crypto rising 654% from 2023 to 2024, often via fake endorsements and fraudulent crypto investment videos. Businesses are targeted frequently; an estimated 400 companies a day face “CEO impostor” deepfake attacks aimed at tricking employees.

To combat this, FaceOff Technologies has developed a groundbreaking solution called DeepFace. This advanced technology detects and maps deepfake videos across the entire web, providing unprecedented insight into their proliferation. By uncovering these fakes at scale, DeepFace is a crucial step toward protecting individuals, industries, and societies from the growing menace of synthetic media.

Deepfake-enabled fraud is causing significant financial damage, with losses projected to grow rapidly. In 2024, corporate deepfake scams cost businesses an average of nearly $500,000 per incident, with some large enterprises losing as much as $680,000 in a single attack.

The deepfake AI market itself is growing at a remarkable rate, projected to jump from an estimated $562.8 million in 2023 to $6.14 billion by 2030, a CAGR of 41.5%. This growth is primarily fueled by the rapid evolution of generative adversarial networks (GANs).

According to Deloitte, generative AI fraud, including deepfakes, cost the U.S. an estimated $12.3 billion in 2023, with losses expected to soar to $40 billion by 2027. This represents an annual increase of over 30%. The FBI's Internet Crime Center has also noted a surge in cybercrime losses, attributing a growing share to deepfake tactics. Globally, these scams are already causing billions in fraud losses each year.

Older adults are particularly vulnerable, with Americans over 60 reporting $3.4 billion in fraud losses in 2023 alone, an 11% increase from 2022. Many of the newer scams, such as impostor phone calls using AI-generated voices, are contributing to this rise. A notable incident involved a Hong Kong firm where an employee was tricked into transferring USD 25 million after a deepfake video call from a supposed CEO.

Increasing AI-generated porn: Recent cases involve deepfake pornographic images of Taylor Swift and Marvel actor Xochitl Gomez, which were spread through the social network X. However, deepfake porn doesn’t just affect celebrities.

(Rising demand for high-quality synthetic media is boosting deepfake AI adoption, alongside a growing need for consulting, training, and integration services.)

Every improvement in AI has made deepfakes more realistic and accessible. What used to require powerful computers can now be done on a smartphone, with open-source code further accelerating their spread. Deepfakes have metastasized from entertainment into dangerous domains:

Just like a biological parasite, deepfakes consume trust—the very foundation of digital communication. They exploit human psychology to deceive, manipulate, and profit. While detection tools are being developed, deepfakes constantly evolve to evade them.

A global "AI Take It Down Protocol" could help by enforcing rapid takedowns of verified deepfakes, mandating watermarking for AI-generated media, and establishing heavy penalties for malicious creators. This ongoing battle requires constant vigilance and adaptive defenses from governments, companies, and technologists alike.

Cybercriminals now exploit cloned voices to steal money, and deepfake fraud is escalating rapidly against individuals and businesses worldwide.

Money laundering has become one of the world’s most pressing financial crimes, enabling organized crime, terrorism financing, tax evasion, and corruption.

The UN estimates that 2–5% of global GDP ($800 billion–$2 trillion) is laundered each year. With the rise of digital banking, cryptocurrency, and cross-border transactions, the complexity of detection and enforcement has multiplied.

Governments are stepping up efforts: the Financial Action Task Force (FATF) drives global standards; the EU’s new AML Authority (AMLA) will launch in 2025; the U.S. AML Act of 2020 strengthens reporting and corporate transparency; and India’s PMLA now extends to digital assets and fintech platforms.

Modern AML is powered by AI, machine learning, and federated learning, enabling smarter detection of suspicious patterns with fewer false positives.

Blockchain analytics track crypto transactions, while behavioral biometrics fight deepfakes, synthetic IDs, and mule accounts.

Global Market Projections (AML)

• The global AML market is expected to grow from USD 4.13 billion in 2025 to USD 9.38 billion by 2030, at a robust CAGR of 17.8%.

• Another forecast estimates the market to rise from USD 1.73 billion in 2024 to USD 4.24 billion by 2030, growing at 16.2% CAGR.

• Broader projections (including software and services) put the market at USD 4.48 billion in 2024, scaling to USD 13.56 billion by 2032 at 14.8% CAGR.

• A more optimistic scenario anticipates growth from USD 3.29 billion in 2023 to USD 19.05 billion by 2032, at 19.2% CAGR.

Software-Only Segment (AML Software)

• The AML software market alone is projected to expand from USD 2.04 billion in 2023 to USD 5.91 billion by 2032, at a 12.6% CAGR.
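The growth rates quoted above are compound annual growth rates (CAGR), computed as (end value / start value) raised to the power 1 / years, minus 1. As a quick sanity check, the short Python sketch below recomputes the rate for a few of the quoted forecasts. The helper `cagr` is written for this illustration, and the number of years is assumed to be the difference between the two calendar years in each forecast.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# (label, start in USD billion, end in USD billion, years), taken from the text.
forecasts = [
    ("AML market, 2025 to 2030", 4.13, 9.38, 2030 - 2025),
    ("AML market, 2024 to 2030", 1.73, 4.24, 2030 - 2024),
    ("AML market incl. services, 2024 to 2032", 4.48, 13.56, 2032 - 2024),
    ("AML software, 2023 to 2032", 2.04, 5.91, 2032 - 2023),
]

for label, start, end, years in forecasts:
    print(f"{label}: {cagr(start, end, years):.1%}")
```

The inputs are the rounded endpoints given in the text, so recomputed rates can differ slightly from the quoted ones. A larger gap usually means the underlying report uses a different base year or forecast window than the one assumed here.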

What’s Fueling This Growth?

1. Regulatory Pressure & Compliance

Stricter global regulations, growing enforcement, and a complex cross-border financial landscape are driving financial institutions to invest more in AML technologies.

2. Technological Advances

Integration of AI, machine learning, big data, and real-time analytics has improved detection capabilities, reducing false positives and operational costs.

3. Digital & Financial Ecosystem Expansion